Understanding the Concept of Containerization: Introduction to Docker (Module 1)

Make your applications scalable and highly compatible with containers.

Containers have become widely adopted in software development because they offer clear advantages in software compatibility and consistency.

Working with them has almost become a compulsory skill for software engineers. It's very important to grasp the concept of containers before diving head first into using them. If that sounds good to you, then keep reading.

"The Why"

Docker was introduced as a tool to package applications together with required dependencies in a consistent environment for production and deployment. This tool was introduced as a result of the problems faced with the traditional methods of deployment.

Here are two of the most common problems:

Dependency Issues: Most applications require multiple dependencies at varying versions. Managing these across local and deployment environments can cause errors and be really stressful.

Environment Inconsistency: A good example is a group of 4 members working on the same project but using different operating systems (e.g. Windows, macOS, Linux). They would face compatibility problems because dependencies and frameworks can behave differently in different environments.

A more obvious example is pulling a Node.js API from GitHub that was set up with version 20 onto a local machine running version 18. This would prove problematic for dependencies that require a minimum of version 20 to work, and you would have to spend extra time installing the right versions on your local machine.
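The version mismatch above can be made concrete with a small check. This is a minimal sketch in Python, not part of any Node.js tooling: the function names `parse_version` and `meets_minimum` and the sample version strings are assumptions made for illustration, and the comparison only handles plain numeric versions such as `20` or `v18.19.0`.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a version string such as 'v20.11.0' or '18' into a tuple of ints."""
    cleaned = version.strip().lstrip("vV")
    return tuple(int(part) for part in cleaned.split("."))


def meets_minimum(installed: str, required: str) -> bool:
    """Return True when the installed version is at least the required one."""
    have = parse_version(installed)
    need = parse_version(required)
    # Pad the shorter tuple with zeros so '20' compares equal to '20.0.0'.
    width = max(len(have), len(need))
    have += (0,) * (width - len(have))
    need += (0,) * (width - len(need))
    return have >= need


if __name__ == "__main__":
    # Hypothetical values: the project expects Node.js 20, the laptop has 18.
    print(meets_minimum("v18.19.0", "20"))  # False
    print(meets_minimum("v20.11.0", "20"))  # True
```

A check like this only detects the problem. Containers avoid it by shipping the expected runtime version together with the application.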

What is a Container?

A container is a lightweight, standalone and executable software package that isolates an application with all of its dependencies and runtime environment, including libraries and system tools, into a single portable unit. As the name implies, it wraps your application in an isolated environment that lets it run efficiently, eliminating the problems mentioned above.

"The How"

It is important to understand that containers rely on the host system's kernel to provide isolation. The kernel is the core part of an operating system and serves as the intermediary between software and hardware. Instead of booting an operating system of their own, containers share the host kernel, and the container runtime uses kernel features (on Linux, namespaces and control groups) to isolate each container and manage its resources. This direct use of the host kernel is what makes containers resource efficient and worthy of the title "lightweight".
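One way to see the role of the host kernel for yourself is to ask the operating system which kernel a process is running on. The sketch below uses only Python's standard `platform` module; the helper name `kernel_summary` is made up for this example. On a Linux host, running it on the host and then inside a container would be expected to report the same kernel release, since the container has no kernel of its own. On macOS or Windows, Docker Desktop runs containers inside a Linux virtual machine, so the container would report that machine's kernel instead.

```python
import platform


def kernel_summary() -> dict[str, str]:
    """Collect basic facts about the kernel this process is running on."""
    return {
        "system": platform.system(),    # e.g. 'Linux', 'Darwin', 'Windows'
        "release": platform.release(),  # kernel release string
        "machine": platform.machine(),  # CPU architecture, e.g. 'x86_64'
    }


if __name__ == "__main__":
    for key, value in kernel_summary().items():
        print(f"{key}: {value}")
```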

Containerization is the process of packaging and running applications in a consistent and isolated environment, the container. This improves deployment and scalability considerably. Multiple containers can run on one system, all isolated from each other.

"A very good analogy is packing a child's lunch for school. Assume the lunch box is the container and the items in it are the various components of the application. The box keeps the child's lunch isolated from that of the other kids and in a consistent state until it's time to eat, and containers do just the same for applications."

Are Containers the Same as Virtual Machines?

| Containers | Virtual Machines |
| --- | --- |
| DevOps integration and deployment (CI/CD) | More resource usage |
| Microservices architecture | Running old legacy systems |
| Scalable web services | Multi-tier applications |

Containers may seem similar to VMs in concept, but they are not the same. Containers are used mainly where fast deployment and scalability matter, and they are preferred for microservice architectures. They are less resource intensive, so a single host system can run many instances at once.

Virtual machines are more resource intensive. They are used for running old legacy systems and for workloads that need full isolation or stronger security. A virtual machine emulates a complete computer, including virtualized hardware and its own guest operating system. Because each one carries a full operating system, running many virtual machines on one host consumes far more resources than running the same number of containers.

What is Docker?

Docker is an open source tool that delivers software in packages called containers. It is used to containerize software, and it is the most popular of the tools available for the job.

Benefits

Consistency: Docker ensures consistent software environments because developers package their applications and dependencies into containers.

Isolation: Each container runs in its own isolated environment, which keeps applications independent from each other.

Portability: Containers can run consistently across different platforms, including local development machines, on-premises servers, and cloud infrastructure.

Resource Efficiency: Docker containers are lightweight and consume far fewer resources than traditional virtual machines.

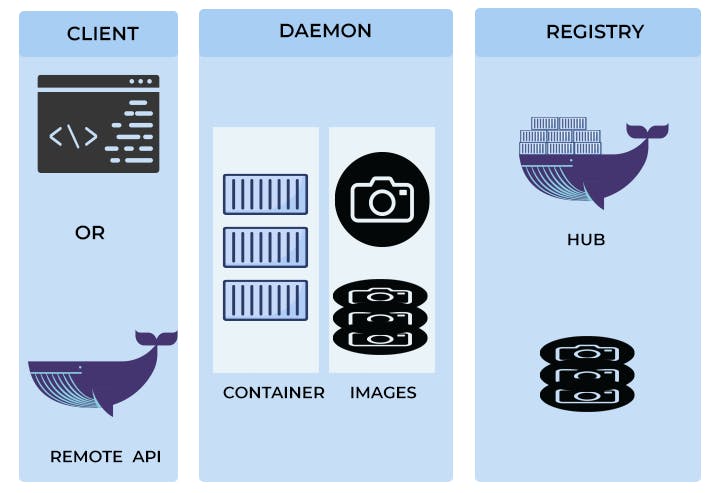

Key Components

Docker consists of key components that enable containerization and streamline the process of building, shipping, and running applications.

- Docker Engine creates and manages containers on the host system. It includes the Docker daemon, which runs in the background and manages container lifecycle operations, and the Docker CLI (command line interface) for interacting with Docker.

- Docker Image is a read-only template used to create containers. It contains the application code, libraries, dependencies, and other files needed to run the application. Docker images are built from Dockerfiles and can be shared and reused across different environments. Images are stored in a registry, such as Docker Hub.

- Docker Container is a lightweight, runnable instance of a Docker image. Containers encapsulate applications and their dependencies, providing a consistent runtime environment for running applications.

- Dockerfile is a plain text file that contains instructions for building a Docker image. It specifies the base image, dependencies and other configuration settings needed for the application.

- Docker Registry is a storage and distribution service for Docker images. The most popular registry is Docker Hub, which provides a centralized repository for storing, sharing, and distributing Docker images. This is similar to GitHub, except that developers pull and push images rather than source code.

- Docker Compose is a tool for defining and running multi-container Docker applications. It uses a YAML file to specify the services, networks, and volumes required for an application, allowing developers to define complex application architectures with ease.
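To make the Dockerfile component less abstract, the sketch below assembles the text of a small Dockerfile in Python. The helper `render_dockerfile`, the `node:20` base image, the `npm install` step and the `server.js` entry point are all assumptions chosen to match the Node.js example from earlier. A real project would normally write its Dockerfile by hand and adjust each instruction to fit.

```python
import json


def render_dockerfile(base_image: str, install_command: str, start_command: list[str]) -> str:
    """Return the text of a minimal Dockerfile built from the given pieces."""
    lines = [
        f"FROM {base_image}",      # base image the new image builds on
        "WORKDIR /app",            # working directory inside the image
        "COPY . .",                # copy the project files into the image
        f"RUN {install_command}",  # install dependencies at build time
        # CMD in exec form is a JSON array, so json.dumps gives the right quoting.
        f"CMD {json.dumps(start_command)}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical project: a Node.js API started with `node server.js`.
    print(render_dockerfile("node:20", "npm install", ["node", "server.js"]))
```

Each line maps to an idea from the list above: `FROM` names the base image, `RUN` installs the dependencies, and `CMD` is the process that starts when a container is created from the resulting image.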

Containers are very important and take patience to master, so I have two more modules lined up where I will walk you through hands-on containerization with Docker and, after that, deployment.

If you enjoyed this and want to complete the modules, give me a follow and watch out for module 2 of this series.